By Captain Shem Malmquist

Please also see my book, “Angle of Attack”, written with Roger Rapoport.

Introduction

Humans are subject to a variety of heuristic biases. These directly affect the decision-making process and can result in incorrect judgments. In most settings the consequences are relatively harmless. However, for those operating at the sharp end of a safety-critical position, these biases can be deadly, and there is often little time to catch the errors they produce. A bias alters the operator's perception of reality, creating a very real barrier or screen that either blocks or filters the true nature of the situation. These effects can be exacerbated by physiological issues, as well as by external influences that become part of the feedback the operator uses in the decision-making process.

Cognitive bias effect on human perception

Human perception is a “conscious sensory experience” that uses a combination of our senses and our brain to filter and interpret sensory inputs (Goldstein, 2010). Research has revealed a number of common ways in which our brains can “trick” us into misperceiving the reality of a situation. These biases act as filters that hinder our ability to make accurate decisions. In most situations some of these biases may have little effect on the final outcome of our decisions, but in certain high-risk decisions, a choice that has been improperly filtered by cognitive bias can be fatal.

Types of cognitive bias

There are many types of cognitive bias that can affect safety of flight. The following are some examples of biases that can be particularly dangerous to flight crews:

Ambiguity effect

An aspect of decision theory in which a person is more likely to select an option whose risk is intuitively clear over one whose risk seems less certain. This can lead someone to choose a riskier option simply because its risk is better known (Baron and Frisch, 1994), as might be the case when deciding between flying an approach in questionable weather and diverting to an alternate airport with other, unknown issues.

Anchoring bias

A tendency for people to base decisions on a provided reference point. For example, if given a baseline amount of fuel as a requirement, people will use that number in determining fuel requirements regardless of whether operational needs might actually call for much more, or much less (Kahneman, 2011).

Attentional bias

Humans pay more attention to things that have an emotional aspect to them (MacLeod, Mathews and Tata, 1986). In flight operations, this could lead a person to make a decision based on a threat perceived through the lens of a past experience, a “thermal scar”. For example, a pilot who once had a “scare” from running low on fuel might ignore the risk of severe weather in an attempt to avoid repeating the low-fuel threat.

Attentional tunneling

Wickens and Alexander (2009) define this construct as “the allocation of attention to a particular channel of information, diagnostic hypothesis, or task goal, for a duration that is longer than optimal, given the expected cost of neglecting events on other channels, failing to consider other hypotheses, or failing to perform other tasks.” Several well-known aircraft accidents have been attributed to attentional tunneling, including the crash of Eastern Air Lines Flight 401, which National Transportation Safety Board (NTSB) investigators found had descended into the Everglades while the crew focused on an inoperative landing gear position light (NTSB, 1972).

Automaticity

While not a bias, this refers to the fact that humans who perform tasks repeatedly will eventually learn to perform them automatically (Pascual, Mills and Henderson, 2001). While generally a positive attribute, this can lead to a person automatically performing a function (such as a checklist item) without actually being cognizant of the task itself. Expectation bias can lead them to assume that the item is correctly configured even if it is not.

Availability heuristic

This describes how people overestimate the likelihood of an event based on the emotional influence the event had on them, or on how much personal experience they have had with that type of event. This can lead to incorrect assessments of risk, with some events assigned more risk than they warrant and others not enough (Kahneman, 2011; Carroll, 1978; Schwarz, Bless, Strack, Klumpp, Rittenauer-Schatka and Simons, 1991). An example might be the high salience of concern over fuel tank explosions (a very rare event) compared with the attention given to technology for reducing loss-of-control-inflight events, which the Commercial Aviation Safety Team (CAST) rated as much more likely (CAST, 2003).

Availability cascade

This is a process in which something repeated over and over comes to be accepted as fact (Kuran and Sunstein, 1999). It is very common in politics, so how does it play a role in flight operations? The answer is that the same process can turn misconceptions into accepted “facts”. A pilot might say “be careful with that airplane, its control surfaces are too small”, and if this is repeated often enough, other pilots may simply accept it as fact and then make inappropriate flight control inputs based on an incorrect mental model of the aircraft's dynamics.

Base rate fallacy

The base rate fallacy is the tendency to base judgments on specifics while ignoring general statistical information. Historically, a lack of data has made it difficult to see large statistical trends. When a person involved in flight safety planning focuses on specific events rather than on the probability across the entire set of events, they are ignoring the base rate. The same tendency can affect pilots, leaving them unable to accurately assess the risk of certain decisions (Kahneman, 2011).
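No figures are given here for the base rate fallacy, so the following is only an illustrative sketch with made-up numbers. It applies Bayes' rule to a hypothetical cue associated with a rare event: judged on the cue alone, the event looks almost certain, but the picture changes once the base rate is included. The function name `posterior` and all of the probabilities are assumptions chosen for illustration, not data from any accident or safety study.

```python
def posterior(base_rate: float, hit_rate: float, false_alarm_rate: float) -> float:
    """Bayes' rule: P(event | cue), given the base rate P(event),
    the hit rate P(cue | event) and the false alarm rate P(cue | no event)."""
    p_cue_and_event = hit_rate * base_rate
    p_cue_and_no_event = false_alarm_rate * (1.0 - base_rate)
    return p_cue_and_event / (p_cue_and_event + p_cue_and_no_event)


# Hypothetical numbers, for illustration only: the event occurs on 1 flight
# in 1,000, the cue appears on 99% of those flights, and it also appears on
# 5% of flights where the event is absent.
p = posterior(base_rate=0.001, hit_rate=0.99, false_alarm_rate=0.05)
print(f"P(event | cue) = {p:.3f}")
```

Looking only at the 99% hit rate suggests near certainty, yet with a 1-in-1,000 base rate the sketch gives a probability of roughly 2%. Ignoring the base rate distorts the judgment in either direction, which is why risk assessment has to consider the whole set of events rather than the specific case at hand.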

Confirmation bias

A common issue in politics, but pervasive across many fields, this describes a situation in which a person ignores facts or information that do not conform to their preconceived mental model, and assumes as true any information that does conform to their beliefs (Nickerson, 1998). This is very dangerous in aviation, where a pilot might form an incorrect mental model of the situation and have great difficulty changing that view even in the face of new information.

Expectation bias

This might be considered a subset of confirmation bias, but describes a situation where a person sees the results they expect to see (Jeng, 2006). Expectation bias is described within the context of several aircraft events in the next section.

Optimism bias

As the name suggests, this is a situation where people are overly optimistic about outcomes (Chandler, Greening, Robison and Stoppelbein, 1999; DeJoy, 1989). It is a common issue in aviation, as pilots have seen so many bad situations turn out “okay” that the sense of urgency and risk can be reduced when such reduction is not warranted.

Overconfidence effect

As the name suggests, there is a strong tendency for people to overestimate their own abilities or the qualities of their own judgments (Dunning and Story, 1991; Mahajan, 1992). This can have fairly obvious implications in flight operations.

Plan continuation

This might be considered a subset of confirmation bias. There is a strong tendency to continue pursuing the same course of action once a plan has been made (McCoy and Mickunas, 2000). This tendency may also be influenced by some of the same issues that lead to the “sunk cost effect”, a “greater tendency to continue an endeavor once an investment in money, effort, or time has been made” (Arkes and Blumer, 1985). Plan continuation bias has been shown to be a factor in several events, as outlined in the next section.

Prospective memory

A common situation in which a person needs to remember to do something in the future (Dodhia and Dismukes, 2008; West, Krompinger and Bowry, 2005). It is particularly challenging in the face of distractions of any sort. For example, a person driving home from work needs to stop to pick up milk en route. If that person then gets a phone call, there is a high probability that they will forget to stop at the store. In aviation, this can lead to many items being missed. A pilot might notice a problem during a preflight inspection and later, after a subsequent distraction, forget to report the issue to maintenance.

Selective perception

There is a strong bias to view events through the lens of our belief system (Massad, Hubbard and Newtson, 1979). This differs from expectation bias in that it generally applies to our perception of information as filtered by the belief system itself, whereas expectation bias more generally describes situational awareness shaped by what we expect to happen. Selective perception can lead to incorrect hypotheses, such as a belief that an event had supernatural causes. In an aircraft, such a belief system can lead to false assumptions.

Accidents and incidents resulting from cognitive bias

Cognitive bias plays a role in most aircraft accidents in one way or another, and each accident involves various factors. Some biases, like attentional tunneling, confirmation bias and plan continuation, are very common errors of the flight crew, while others, such as anchoring bias, are more likely to be found at the operator level. The following are just a few examples of the ways that cognitive bias can play a role in aircraft accidents and incidents.

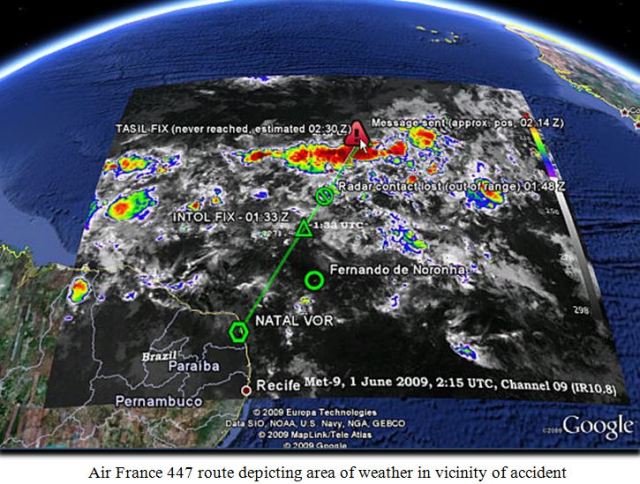

Air France 447

On June 1, 2009, an Airbus A-330 operated by Air France crashed while crossing the Atlantic Ocean on a flight from Rio de Janeiro to Paris. The aircraft was in cruise flight, approaching the intertropical convergence zone, with a line of thunderstorms ahead. The first officer was the pilot flying, but the aircraft was on autopilot. The first officer appeared concerned about the weather ahead (perhaps due to his relative inexperience) and wanted to climb, but the aircraft was too heavy. The captain did not appear particularly concerned about the weather, and a few minutes before encountering the thunderstorms, he elected to take his crew rest, bringing the relief first officer forward. The relief first officer had considerably more experience than the first officer in the right seat. After a short briefing, the relief first officer took over the duties of the pilot in the left seat.

The two first officers discussed the approaching weather. The relief first officer, like the captain, did not appear to be particularly concerned about the line of weather. This would be common for an experienced airline pilot, as crossing such lines of weather at cruise altitude is very common and rarely results in any problems.

When the aircraft entered the line of weather, the crew immediately experienced turbulence and then (unknown to them) an area of high-altitude ice crystals. The ice crystals were of sufficient quantity to block all three of the aircraft's pitot tubes (used for measuring airspeed), causing a significant loss of data that resulted in the autopilot disconnecting, the loss of much of the aircraft's displays, and a series of electronic alert messages from the various systems that depended on airspeed.

When the autopilot first disconnected, the aircraft was slightly nose-low and slightly below the target altitude of 35,000 feet. The first officer pulled back a bit on the controls to raise the nose, and over the next 20 seconds the nose continued to pitch up to about 12 degrees. While this is above the normal pitch angle for that altitude, it is not a particularly high pitch attitude and is, in fact, well below the climb attitude after takeoff. As such, it is probably not surprising that the crew was not concerned about the pitch attitude, although they were concerned that the aircraft was climbing. The pitch change was also slow enough that it would not have been particularly salient. Meanwhile, the pitot tube blockage caused the airspeed indications to fluctuate and become clearly unreliable.

The aircraft entered an aerodynamic stall approximately 44 seconds after the autopilot disconnect. The crew did not initially react to this, possibly because they did not recognize the stall. However, as the aircraft started to lose altitude, the pilot flying reacted with full pitch-up commands on the flight control inceptor. While this may appear to be a surprising input, as it is not the normal response to a stall, several factors led to it. First, because regulations no longer allow pilots to “hand fly” the aircraft at cruise altitudes, they have no experience with its handling qualities at that altitude. Second, the Airbus flight controls are designed so that, under normal conditions, a full “nose up” command results in the maximum performance of the aircraft. Unfortunately, due to the loss of airspeed indications, the flight controls had reverted from “normal law” to “alternate law”, so the nose-up input could drive the aircraft beyond its maximum flight envelope, deepening the aerodynamic stall. The aircraft remained in the aerodynamic stall until it struck the ocean. There were no survivors.

Biases involved in this event include attentional tunneling, expectation bias, confirmation bias, automaticity, optimism bias and the overconfidence effect, exacerbated by a lack of training. As the situation developed, the crew clearly experienced attentional tunneling. The pilots were extremely concerned about getting the captain back to the flight deck, and said so several times. In addition, they appeared to be worried about the altitude and the electronic alerts, and were focusing on those issues.

The line of weather was penetrated just before the autopilot disconnected. The crew was anticipating turbulence (they had advised the flight attendants of it), so it is likely that they attributed the pitch attitude, the shaking of the stall buffet and the loss of altitude to the weather itself, displaying expectation bias.

The first officer’s nose up input was likely an automaticity response. In training, the response to unusual aircraft situations was to apply full nose-up inputs due to the flight control laws incorporated in the A-330. Unfortunately, that did not apply to this situation.

Once the aircraft stalled, the crew discounted conflicting cues and held onto the notion that they had lost all of their instruments, exhibiting confirmation bias. This lasted for the next few minutes, but the crew did not have long: the time from autopilot disconnect to impact with the ocean was only about four minutes. With altimeters spinning at a rate that blurred the indications, the pitch at what would normally be a positive climb attitude, and the engines at maximum power, it is not surprising that the conflicting cues were hard to sort out.

The crew's usual experience of situations turning out well likely produced some overconfidence effect, and optimism bias played out in the crew's interactions. The relief pilot in the left seat did not forcibly take the controls (although he was more experienced), likely due to a combination of excessive “professional courtesy” (not wanting the right-seat first officer to “lose face”) and optimism that the situation would turn out well (BEA, 2012).

American Airlines 903

On May 12, 1997, an American Airlines A-300 was approaching Miami, Florida. Descending to level off at 16,000 feet, the crew was cleared into a holding pattern due to air traffic. Precipitation was depicted on the weather radar in the vicinity of the holding point. Perhaps to assist the autothrottles in the descent, the throttle levers had been pulled back below the normal autothrottle minimum limit, which apparently caused the autothrottle system to disengage. As the aircraft approached the holding point in level flight, the airspeed started to decay due to lack of engine thrust. The flight crew was concentrating on the weather ahead and expected the ride to get rough as they penetrated the storm (expectation bias).

The aircraft entered the weather and, at about the same time, encountered aerodynamic stall buffet. The crew interpreted this buffet as turbulence (expectation bias), and when the aircraft actually stalled, the crew assumed that they had encountered windshear and applied the windshear escape maneuver procedure. As the aircraft continued to buck and shake, the crew believed that they were in a severe windshear event. Fortunately, they were able to recover; however, the crew was not aware that they had stalled the aircraft until investigators later informed them (NTSB, 1997; Dismukes, Berman and Loukopoulos, 2007).

USAF C-5 Diego Garcia

More than 20 hours into an augmented duty day, the crew of a U.S. Air Force Lockheed C-5 was approaching Diego Garcia. As they configured for landing, they encountered an area of precipitation. While the crew concentrated on the approach and configuration (attentional tunneling), the aircraft continued to slow with power retarded. The crew likely anticipated some turbulence (expectation bias), and as the aircraft slowed, it started to buffet. The aircraft was descending below the target profile, so they pitched the aircraft up further (attentional tunneling and confirmation bias), which led to an aerodynamic stall. The aircraft departed controlled flight, bucking wildly. The crew regained control just a few hundred feet above the water and came around for a safe landing, believing all the while that they had encountered windshear. It is probable that fatigue also exacerbated the issues facing this crew (USAF, ND).

Delta Airlines 191

On August 2, 1985, a Lockheed L-1011 was on approach to Dallas-Fort Worth airport. Scattered thunderstorms were in the area, which the crew circumnavigated. As the aircraft was vectored to the final approach course, a thunderstorm cell moved to an area just north of the airport, on short final for the runway they were to land on. The crew was highly experienced and had certainly encountered similar conditions in the past. Optimism bias was likely present in their assessment of the situation, and it was reinforced when the aircraft ahead of them landed without incident. The crew was aware of the convective weather and rain on the approach, but clearly not of the impending microburst. As in some other accidents, the combination of plan continuation bias and a general lack of concern about the hazard, due to lack of training, led this crew to continue the approach. The aircraft encountered a microburst, resulting in a significant loss of airspeed.

During the microburst encounter the flying pilot (the first officer) appeared to be focusing on the airspeed (attentional tunneling), at the expense of altitude. The aircraft crashed just short of the runway (NTSB, 1985).

Countermeasures to mitigate bias

Once identified, the hazards of cognitive bias can be mitigated through countermeasures that target the issues directly. These involve changing the way that humans think, and there are only a handful of ways to do that.

Training

Whether the training is initiated at the individual level through personal development or via formal programs, training is the only way to actually change the root behavioral issues that result in biases. The first defense against any hazard is to understand that the hazard exists.

Education

People working in safety-critical positions should be trained to understand the types of cognitive bias that exist, and strategies to avoid them. A thorough understanding allows individuals to see how these biases can affect them, and how dangerous and insidious they can be. In addition, proven methods to identify these problems should be explored and taught. These methods should be trained until they are understood at the level of recognition-primed decision making for issues that require an immediate response, and operators should also be taught when they need to make a more deliberate decision to avoid the traps (Kahneman and Klein, 2009).

Crew Resource Management

One of the most effective and proven strategies for combating perceptual error has been crew resource management (Cooper, White and Lauber, 1980). Through these methods, the other members of the crew (or team) can serve as a safety net to catch errors. If these individuals are trained to recognize cognitive biases, they will be much more likely to recognize the scenario in real time.

Conclusion, Recommendations and Research

Cognitive bias continues to play a major role in aerospace accidents. Much of the time, those at the “sharp end” are not even aware that they are experiencing these biases and their associated effects on perception and judgment. The accident rate could be reduced through education and training to mitigate these issues. Accident investigators should be trained to identify these issues so that they can be addressed through formal channels following an accident investigation.

At present, despite all the research, understanding of these issues and, more importantly, of how to mitigate them remains limited. Research should be conducted to find ways to improve human performance, specifically targeting the factors surrounding cognitive biases.

References

Arkes, H. R., & Blumer, C. (1985). The psychology of sunk cost. Organizational behavior and human decision processes, 35(1), 124-140.

Baron, J., & Frisch, D. (1994). Ambiguous probabilities and the paradoxes of expected utility. Subjective probability, 273-294.

Bureau d’Enquêtes et d’Analyses. (2012). Final Report on AF 447. Le Bourget, FR.: BEA.

Carroll, J. S. (1978). The effect of imagining an event on expectations for the event: An interpretation in terms of the availability heuristic. Journal of experimental social psychology, 14(1), 88-96.

Chandler, C. C., Greening, L., Robison, L. J., & Stoppelbein, L. (1999). It can’t happen to me … or can it? conditional base rates affect subjective probability judgments. Journal of Experimental Psychology: Applied, 5(4), 361-378. doi: http://dx.doi.org/10.1037/1076-898X.5.4.361

Commercial Aviation Safety Team. (2003). CAST Plan and Metrics 09-29-03. Retrieved from http://www.icao.int/fsix/cast/CAST%20Plan%20and%20Metrics%209-29-03.ppt

Cooper, G. E., White, M. D., & Lauber, J. K. (Eds). (1980). Resource management on the flightdeck: Proceedings of a NASA/industry workshop (NASA CP-2120). Moffett Field, CA: NASA-Ames Research Center.

DeJoy, D. M. (1989). The optimism bias and traffic accident risk perception. Accident Analysis & Prevention, 21(4), 333-340.

Dismukes, R.K., Berman, B., and Loukopoulos, L. (2007). The Limits of Expertise. Burlington, VT: Ashgate.

Dodhia, R. M., & Dismukes, R. K. (2008). Interruptions create prospective memory tasks. Applied Cognitive Psychology, 23, 73-89 (2009).

Dunning, D., & Story, A. L. (1991). Depression, realism, and the overconfidence effect: Are the sadder wiser when predicting future actions and events? Journal of Personality and Social Psychology, 61(4), 521-532. doi: http://dx.doi.org/10.1037/0022-3514.61.4.521

Goldstein, E.B. (2010). Sensation and Perception, Eighth Edition. Belmont, CA: Wadsworth

Hoffrage, U., Hertwig, R., & Gigerenzer, G. (2000). Hindsight bias. Journal of Experimental Psychology: Learning, Memory and Cognition [PsycARTICLES], 26(3), 566-566. doi:http://dx.doi.org/10.1037/0278-7393.26.3.566

Jeng, M. (2006). A selected history of expectation bias in physics. American journal of physics, 74, 578.

Kahneman, D. (2011). Thinking, Fast and Slow. New York: Farrar, Straus and Giroux.

Kahneman, D., and Klein, G. (2009). Conditions for Intuitive Expertise: A Failure to Disagree. American Psychologist. 64(6), 515-526.

Kuran, T., & Sunstein, C. R. (1999). Availability cascades and risk regulation. Stanford Law Review, 683-768.

MacLeod, C., Mathews, A., & Tata, P. (1986). Attentional bias in emotional disorders. Journal of abnormal psychology, 95(1), 15-20.

Mahajan, J. (1992). The overconfidence effect in marketing management predictions. JMR, Journal of Marketing Research, 29(3), 329-329. Retrieved from http://search.proquest.com/docview/235228569?accountid=27313

Massad, C. M., Hubbard, M., & Newtson, D. (1979). Selective perception of events. Journal of Experimental Social Psychology, 15(6), 513-532.

McCoy, C. E., & Mickunas, A. (2000, July). The role of context and progressive commitment in plan continuation error. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Vol. 44, No. 1, pp. 26-29). SAGE Publications.

Moore, D. A., & Small, D. A. (2007). Error and bias in comparative judgment: On being both better and worse than we think we are. Journal of Personality and Social Psychology, 92(6), 972-989. doi:http://dx.doi.org/10.1037/0022-3514.92.6.972

National Transportation Safety Board. (1972). Aircraft Accident Report, AAR-73-14. Washington, D.C.: NTSB

National Transportation Safety Board. (1985). Aircraft Accident Report, AAR-86/05. Washington, D.C.: NTSB

National Transportation Safety Board. (1997). Brief of Accident, DCA97MA049. Washington, D.C.: NTSB

Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2(2), 175-220.

Pascual, R., Mills, M., & Henderson, S. (2001). Training and technology for teams. IEE Control Engineering Series, 133-149.

Schwarz, N., Bless, H., Strack, F., Klumpp, G., Rittenauer-Schatka, H., & Simons, A. (1991). Ease of retrieval as information: Another look at the availability heuristic. Journal of Personality and Social psychology, 61(2), 195-202.

Harding, T. P. (2004). Psychiatric disability and clinical decision making: The impact of judgment error and bias. Clinical Psychology Review, 24(6), 707-729. doi:10.1016/j.cpr.2004.06.003

West, R., Krompinger, J., & Bowry, R. (2005). Disruptions of preparatory attention contribute to failures of prospective memory. Psychonomic Bulletin & Review (Pre-2011), 12(3), 502-7. Retrieved from http://search.proquest.com/docview/204940379?accountid=27313

USAF. (ND). C-5 Stall Incident – Diego Garcia, F3214-VTC-98-0017 PIN# 613554 http://www.youtube.com/watch?v=Tqo8Fq6zz3M

Wickens, C. D., & Alexander, A. L. (2009). Attentional Tunneling and Task Management in Synthetic Vision Displays. International Journal Of Aviation Psychology, 19(2), 182-199. doi:10.1080/10508410902766549
